Upload measurements and run analysis

Now you will post your measurement data and analysis to the database via the API.

If you are running the tutorials in DoLab, the following instructions are not necessary and you can skip directly to the next cell.

You will need to authenticate to the database with your username and password. To make this easy, you can create a file called .env in this folder and complete it with your organization’s URL and authentication information as follows:

```
dodata_url=https://animal.doplaydo.com
dodata_user=demo
dodata_password=yours
dodata_db=animal.dodata.db.doplaydo.com
dodata_db_user=full_access
dodata_db_password=yours
```

If you haven’t defined a .env file or saved your credentials to your environment variables, you will be prompted for your credentials now.
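doplaydo reads these settings for you, so you normally never parse the file yourself. The sketch below only illustrates the KEY=VALUE format with a minimal parser. parse_env_text and export_missing are hypothetical helpers, not part of doplaydo. doplaydo's own loader may treat quoting or comments differently.

```python
import os


def parse_env_text(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and '#' comments (hypothetical helper)."""
    values: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first '=' only, so values may themselves contain '='
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes, e.g. dodata_user="demo"
        values[key.strip()] = value.strip().strip("'\"")
    return values


def export_missing(values: dict[str, str]) -> None:
    """Copy values into os.environ without overwriting variables that are already set."""
    for key, value in values.items():
        os.environ.setdefault(key, value)


example = """
dodata_url=https://animal.doplaydo.com
dodata_user=demo
# comments and blank lines are ignored
"""
print(parse_env_text(example))
```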

```python
import doplaydo.dodata as dd
import pandas as pd
from pathlib import Path
from tqdm.auto import tqdm
import requests
import getpass
import matplotlib.pyplot as plt
from httpx import HTTPStatusError

username = getpass.getuser()
```

Let’s now create a project.

In normal circumstances, everyone shares and contributes to the same project. In this demo, however, we want to keep your project separate from other users' projects for clarity, so we append your username to the project name. This also lets you safely delete and recreate projects without causing issues for others. If you prefer, you can change PROJECT_ID to anything you like; just be sure to update it in the subsequent notebooks of this tutorial as well.

```python
PROJECT_ID = f"resistance-{username}"
MEASUREMENTS_PATHS = list(Path("wafers").glob("*"))
MEASUREMENTS_PATHS
```

```
[PosixPath('wafers/6d4c615ff105'),
 PosixPath('wafers/334abd342zuq'),
 PosixPath('wafers/2eq221eqewq2')]
```

Let's delete the project if it already exists so that you can start fresh.

```python
try:
    dd.project.delete(project_id=PROJECT_ID).text
except HTTPStatusError:
    pass  # the project did not exist yet
```

New project

You can create the project, upload the design manifest, and upload the wafer definitions through the web app, or programmatically as in this notebook.

Upload Project

You can create a new project and extract all cells and devices that match the cell extraction rules below. Here, that means every cell whose name starts with resistance.

The white and black lists take regular expressions. For an introduction to regexes and a place to test them, see regex101.

To extract only top cells, set max_hierarchy_lvl=-1 and min_hierarchy_lvl=-1.

To disable extraction, use a max_hierarchy_lvl smaller than min_hierarchy_lvl.

Whitelists take precedence over blacklists: if you define both, only the whitelist is used.

```python
cell_extraction = [
    dd.project.Extraction(
        cell_id="resistance",
        cell_white_list=["^resistance"],
        min_hierarchy_lvl=0,
        max_hierarchy_lvl=0,
    ),
]

dd.project.create(
    project_id=PROJECT_ID,
    eda_file="test_chip.gds",
    lyp_file="test_chip.lyp",
    cell_extractions=cell_extraction,
).text
```

'{"success":19}'

Upload Design Manifest

The design manifest is a CSV file that includes all the cell names, the cell settings, a list of analyses to trigger, and a list of parameters for each analysis.
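The notebook uses pandas below to drop the analysis columns before uploading. If your tester environment does not have pandas, you can do the same step with the standard csv module, as sketched here. The CSV text is an illustrative excerpt that mirrors the table below; the real file may quote or format the analysis columns differently. drop_columns is a hypothetical helper.

```python
import csv
import io

# Illustrative excerpt mirroring the first rows of design_manifest.csv (not the real file)
MANIFEST_CSV = """cell,x,y,width_um,length_um,analysis,analysis_parameters
resistance_sheet_W10,0,52500,10.0,20,[iv_resistance],[{}]
resistance_sheet_W20,0,157500,20.0,20,[iv_resistance],[{}]
"""


def drop_columns(csv_text: str, columns: set[str]) -> str:
    """Return the CSV text without the given columns (like DataFrame.drop)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    kept = [name for name in reader.fieldnames or [] if name not in columns]
    out = io.StringIO()
    # extrasaction="ignore" silently skips the dropped keys in each row dict
    writer = csv.DictWriter(out, fieldnames=kept, extrasaction="ignore", lineterminator="\n")
    writer.writeheader()
    for row in reader:
        writer.writerow(row)
    return out.getvalue()


print(drop_columns(MANIFEST_CSV, {"analysis", "analysis_parameters"}))
```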

```python
dm = pd.read_csv("design_manifest.csv")
dm
```

| | cell | x | y | width_um | length_um | analysis | analysis_parameters |
|---|---|---|---|---|---|---|---|
| 0 | resistance_sheet_W10 | 0 | 52500 | 10.0 | 20 | [iv_resistance] | [{}] |
| 1 | resistance_sheet_W20 | 0 | 157500 | 20.0 | 20 | [iv_resistance] | [{}] |
| 2 | resistance_sheet_W100 | 0 | 262500 | 100.0 | 20 | [iv_resistance] | [{}] |

```python
dm = dm.drop(columns=["analysis", "analysis_parameters"])
dm
```

| | cell | x | y | width_um | length_um |
|---|---|---|---|---|---|
| 0 | resistance_sheet_W10 | 0 | 52500 | 10.0 | 20 |
| 1 | resistance_sheet_W20 | 0 | 157500 | 20.0 | 20 |
| 2 | resistance_sheet_W100 | 0 | 262500 | 100.0 | 20 |

```python
dm.to_csv("design_manifest_without_analysis.csv", index=False)

dd.project.upload_design_manifest(
    project_id=PROJECT_ID, filepath="design_manifest_without_analysis.csv"
).text
```

'{"success":200}'

```python
dd.project.download_design_manifest(
    project_id=PROJECT_ID, filepath="design_manifest_downloaded.csv"
)
```

PosixPath('design_manifest_downloaded.csv')

Upload Wafer Definitions

The wafer definitions file is a JSON file where you define the wafer names and, for each wafer, the die names and locations.

```python
dd.project.upload_wafer_definitions(
    project_id=PROJECT_ID, filepath="wafer_definitions.json"
).text
```

'{"success":200}'

Upload data

Your tester can write the data as JSON files; it does not need to be written in Python.

You can collect all the paths under the wafer folders that contain measurement data.
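The upload loops below read the wafer ID, die coordinates and device ID from fixed positions in each path, as the printed parts below show. This sketch makes that layout explicit and fails loudly on unexpected paths. The layout wafers/<wafer_id>/<die_x>_<die_y>/<device_id>/data.json is inferred from this tutorial's folder structure. MeasurementLocation and parse_measurement_path are hypothetical names. Unlike the notebook, which passes die_x and die_y as strings, the sketch converts them to integers.

```python
from pathlib import PurePath, PurePosixPath
from typing import NamedTuple


class MeasurementLocation(NamedTuple):
    wafer_id: str
    die_x: int
    die_y: int
    device_id: str


def parse_measurement_path(path: PurePath) -> MeasurementLocation:
    """Split wafers/<wafer_id>/<die_x>_<die_y>/<device_id>/data.json into its parts."""
    parts = path.parts
    if len(parts) != 5 or parts[-1] != "data.json":
        raise ValueError(f"Unexpected measurement path: {path}")
    _root, wafer_id, die, device_id, _filename = parts
    # Die folders are named "<x>_<y>"; coordinates may be negative, e.g. "-1_-2"
    die_x, die_y = die.split("_")
    return MeasurementLocation(wafer_id, int(die_x), int(die_y), device_id)


location = parse_measurement_path(
    PurePosixPath("wafers/6d4c615ff105/-2_0/resistance_resistance_sheet_W20_0_157500/data.json")
)
print(location)
```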

```python
data_files = [
    file
    for MEASUREMENTS_PATH in MEASUREMENTS_PATHS
    for file in MEASUREMENTS_PATH.glob("**/data.json")
]
print(data_files[0].parts)
```

('wafers', '6d4c615ff105', '-2_0', 'resistance_resistance_sheet_W20_0_157500', 'data.json')

You should define plotting settings for each measurement type in Python. Your plots can evolve over time, even for the same measurement type.

Required:

- x_name (str): x-axis name
- y_name (str): y-axis name
- x_col (str): x-column to plot
- y_col (list[str]): y-column(s) to plot; can be multiple

Optional:

- scatter (bool): whether to plot as scatter as opposed to line traces
- x_units (str): x-axis units
- y_units (str): y-axis units
- x_log_axis (bool): whether to plot the x-axis on log scale
- y_log_axis (bool): whether to plot the y-axis on log scale
- x_limits (list[int, int]): clip x-axis data using these limits as bounds (example: [10, 100])
- y_limits (list[int, int]): clip y-axis data using these limits as bounds (example: [20, 100])
- sort_by (dict[str, bool]): columns to sort the data by before plotting. The boolean specifies whether to sort each column in ascending order (example: {"wavelengths": True, "optical_power": False})
- grouping (dict[str, int]): columns to group the data by before plotting. The integer specifies the number of decimal places to round each column to. A separate series is plotted for each unique combination of x column, y column(s), and rounded column values (example: {"port": 1, "attenuation": 2})
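DoData applies sort_by and grouping for you. The client-side sketch below makes the described semantics concrete for a list of rows. sort_rows and group_rows are hypothetical helpers and do not reflect how DoData implements plotting.

```python
from collections import defaultdict
from typing import Any

Row = dict[str, Any]


def sort_rows(rows: list[Row], sort_by: dict[str, bool]) -> list[Row]:
    """Sort by several columns; True means ascending, False descending."""
    result = list(rows)
    # list.sort is stable, so sorting by the last key first yields a multi-key sort
    for column, ascending in reversed(list(sort_by.items())):
        result.sort(key=lambda row: row[column], reverse=not ascending)
    return result


def group_rows(rows: list[Row], grouping: dict[str, int]) -> dict[tuple, list[Row]]:
    """Split rows into series keyed by the rounded values of the grouping columns."""
    series: dict[tuple, list[Row]] = defaultdict(list)
    for row in rows:
        key = tuple(round(row[column], ndigits) for column, ndigits in grouping.items())
        series[key].append(row)
    return dict(series)


rows = [
    {"i": 0.2, "v": 2.0, "port": 1.04},
    {"i": 0.1, "v": 1.0, "port": 1.01},
    {"i": 0.1, "v": 1.1, "port": 2.0},
]
print([row["v"] for row in sort_rows(rows, {"i": True, "v": False})])  # [1.1, 1.0, 2.0]
print(sorted(group_rows(rows, {"port": 1})))  # [(1.0,), (2.0,)]
```

Rounding port to one decimal place puts 1.04 and 1.01 in the same series, so two series would be plotted here.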

```python
measurement_type = dd.api.device_data.PlottingKwargs(
    x_name="i",
    y_name="v",
    x_col="i",
    y_col=["v"],
)
```

Upload measurements

You can now upload measurement data.

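This is a bare-bones example. In a production setting you may also want to add validation, logging, and error handling to ensure smooth operation.

Every measurement you upload triggers the analyses defined for its cell in the design manifest. Because the analysis columns were removed from the manifest above, nothing is triggered automatically in this tutorial; the analyses in the Analysis section are triggered explicitly instead.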
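Here is a minimal sketch of the validation step, run on each data.json before uploading. It assumes a column-oriented layout such as {"i": [...], "v": [...]}, which matches the i and v columns the analysis functions read later. The exact schema your tester writes may differ. validate_measurement and validate_file are hypothetical helpers, not part of doplaydo.

```python
import json
import math
from pathlib import Path


def validate_measurement(payload: dict, required: tuple[str, ...] = ("i", "v")) -> list[str]:
    """Return the problems found in one measurement payload (empty list if OK)."""
    problems = []
    for key in required:
        if key not in payload:
            problems.append(f"missing column {key!r}")
        elif not isinstance(payload[key], list) or not payload[key]:
            problems.append(f"column {key!r} is not a non-empty list")
    # All present columns should have the same number of samples
    lengths = {len(payload[k]) for k in required if isinstance(payload.get(k), list)}
    if len(lengths) > 1:
        problems.append(f"columns have different lengths: {sorted(lengths)}")
    for key in required:
        values = payload.get(key)
        if isinstance(values, list) and any(
            not isinstance(x, (int, float)) or not math.isfinite(x) for x in values
        ):
            problems.append(f"column {key!r} contains non-finite or non-numeric values")
    return problems


def validate_file(path: Path) -> list[str]:
    """Load a data.json file and validate it; JSON errors are reported, not raised."""
    try:
        payload = json.loads(path.read_text())
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    if not isinstance(payload, dict):
        return ["top-level JSON value is not an object"]
    return validate_measurement(payload)
```

For example, before calling dd.api.device_data.upload you could log and skip any path for which validate_file(path) returns a non-empty list.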

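```python
wafer_set = set()
die_set = set()

# Use one thread when the server URL contains "127" (e.g. a local 127.0.0.1 server)
NUMBER_OF_THREADS = 1 if "127" in dd.settings.dodata_url else dd.settings.n_threads
# For this tutorial, force sequential uploads
NUMBER_OF_THREADS = 1
NUMBER_OF_THREADS
```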

1

```python
if NUMBER_OF_THREADS == 1:
    for path in tqdm(data_files):
        # Path layout: wafers/<wafer_id>/<die_x>_<die_y>/<device_id>/data.json
        wafer_id = path.parts[1]
        die_x, die_y = path.parts[2].split("_")
        r = dd.api.device_data.upload(
            file=path,
            project_id=PROJECT_ID,
            wafer_id=wafer_id,
            die_x=die_x,
            die_y=die_y,
            device_id=path.parts[3],
            data_type="measurement",
            plotting_kwargs=measurement_type,
        )
        wafer_set.add(wafer_id)
        die_set.add(path.parts[2])
        r.raise_for_status()
```

```python
# One entry per data file, used by the multi-threaded upload
project_ids = []
device_ids = []
die_ids = []
die_xs = []
die_ys = []
wafer_ids = []
plotting_kwargs = []
data_types = []

for path in data_files:
    device_id = path.parts[3]
    die_id = path.parts[2]
    die_x, die_y = die_id.split("_")
    wafer_id = path.parts[1]

    device_ids.append(device_id)
    die_ids.append(die_id)
    die_xs.append(die_x)
    die_ys.append(die_y)
    wafer_ids.append(wafer_id)
    plotting_kwargs.append(measurement_type)
    project_ids.append(PROJECT_ID)
    data_types.append("measurement")
```

```python
if NUMBER_OF_THREADS > 1:
    dd.device_data.upload_multi(
        files=data_files,
        project_ids=project_ids,
        wafer_ids=wafer_ids,
        die_xs=die_xs,
        die_ys=die_ys,
        device_ids=device_ids,
        data_types=data_types,
        plotting_kwargs=plotting_kwargs,
        progress_bar=True,
    )
```

```python
wafer_set = set(wafer_ids)
die_set = set(die_ids)
print(wafer_set)
print(die_set)
print(len(die_set))
```

```
{'334abd342zuq', '6d4c615ff105', '2eq221eqewq2'}
{'1_-1', '0_0', '2_-1', '1_0', '-2_1', '-1_-2', '1_-2', '-1_2', '0_1', '1_1', '-2_0', '-1_-1', '2_1', '1_2', '0_-1', '-1_0', '-1_1', '2_0', '-2_-1', '0_-2', '0_2'}
21
```

Analysis

You can run analyses at three different levels. For example:

- Device: extract an averaged power envelope over a certain number of samples.
- Die: fit the propagation loss as a function of length.
- Wafer: define the upper and lower spec limits for Known Good Die (KGD).

To upload custom analysis functions to the DoData server, follow these guidelines:

Input:

- Begin with a unique identifier (device_data_pkey, die_pkey, or wafer_pkey) as the first argument.
- Add the keyword arguments the analysis needs.

Output: a dictionary with the following keys:

- output: a simple, one-level dictionary. All values must be serializable; avoid NumPy or pandas types and convert them to lists or plain floats if needed.
- summary_plot: a summary plot, either as a matplotlib figure or an io.BytesIO object.
- attributes: a serializable dictionary of the analysis settings.
- device_data_pkey/die_pkey/wafer_pkey: the identifier that was used.
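The server checks uploaded functions when you call validate, as shown below. A quick local check of a run() return value against the guidelines above can catch simple mistakes before that. Here is a minimal sketch. check_analysis_result is a hypothetical helper. Treating attributes as optional is an assumption based on the example device function below, which does not return it.

```python
import io
import json
from typing import Any

PKEY_NAMES = ("device_data_pkey", "die_pkey", "wafer_pkey")


def check_analysis_result(result: dict[str, Any]) -> list[str]:
    """Return the guideline violations found in a run() result (empty list if none)."""
    problems = []
    output = result.get("output")
    if not isinstance(output, dict):
        problems.append("'output' must be a dict")
    else:
        if any(isinstance(v, dict) for v in output.values()):
            problems.append("'output' must be a single-level dict")
        try:
            json.dumps(output)
        except (TypeError, ValueError) as exc:
            problems.append(f"'output' is not JSON-serializable: {exc}")
    if "summary_plot" not in result:
        problems.append("missing 'summary_plot'")
    try:
        json.dumps(result.get("attributes", {}))
    except (TypeError, ValueError) as exc:
        problems.append(f"'attributes' is not JSON-serializable: {exc}")
    if not any(name in result for name in PKEY_NAMES):
        problems.append("missing the identifier (device_data_pkey, die_pkey or wafer_pkey)")
    return problems


example = {
    "output": {"resistance": 2.2e-08},
    "summary_plot": io.BytesIO(),  # a PNG buffer would normally go here
    "device_data_pkey": 6258,
}
print(check_analysis_result(example))  # []
```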

Device analysis

You can trigger an analysis automatically by defining it in the design manifest, or manually using the UI or the Python DoData library.

```python
from IPython.display import Code, display, Image

import doplaydo.dodata as dd

display(Code(dd.config.Path.analysis_functions_device_iv_resistance))
```

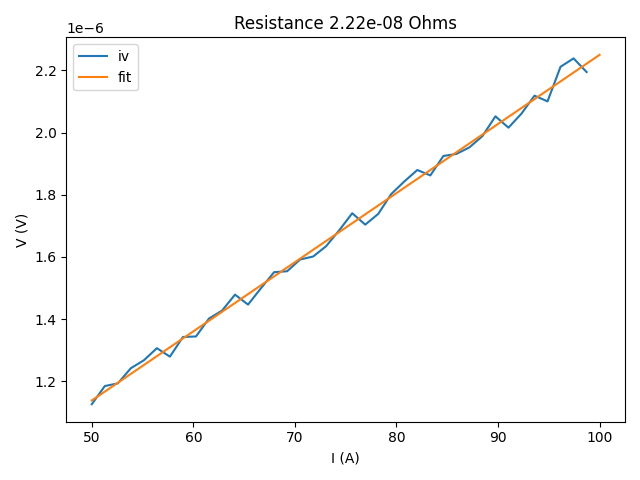

"""Fits resistance from an IV curve."""

from typing import Any

import numpy as np

from matplotlib import pyplot as plt

import doplaydo.dodata as dd

def run(

device_data_pkey: int,

min_i: float | None = None,

max_i: float | None = None,

xkey: str = "i",

ykey: str = "v",

) -> dict[str, Any]:

"""Fits resistance from an IV curve.

Args:

device_data_pkey: pkey of the device data to analyze.

min_i: minimum intensity. If None, the minimum intensity is the minimum of the data.

max_i: maximum intensity. If None, the maximum intensity is the maximum of the data.

xkey: key of the x data.

ykey: key of the y data.

"""

data = dd.get_data_by_pkey(device_data_pkey)

if xkey not in data:

raise ValueError(

f"Device data with pkey {device_data_pkey} does not have xkey {xkey!r}."

)

if ykey not in data:

raise ValueError(

f"Device data with pkey {device_data_pkey} does not have ykey {ykey!r}."

)

i = data[xkey].values

v = data[ykey].values

min_i = min_i or np.min(i)

max_i = max_i or np.max(i)

i2 = i[(i > min_i) & (i < max_i)]

v2 = v[(i > min_i) & (i < max_i)]

i, v = i2, v2

p = np.polyfit(i, v, deg=1)

i_fit = np.linspace(min_i, max_i, 3)

v_fit = np.polyval(p, i_fit)

resistance = p[0]

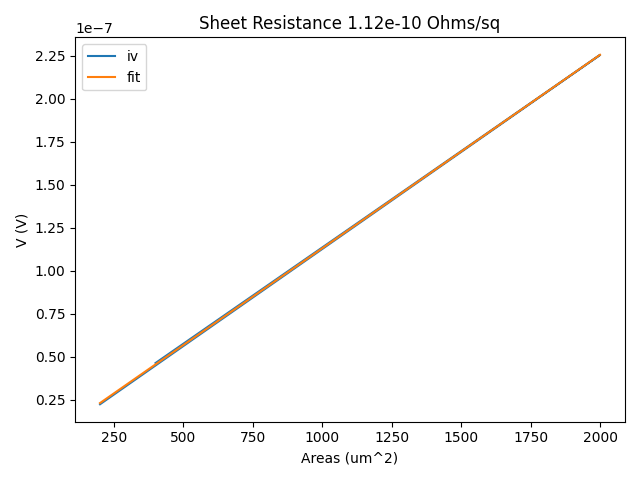

fig = plt.figure()

plt.plot(i, v, label="iv", zorder=0)

plt.plot(i_fit, v_fit, label="fit", zorder=1)

plt.xlabel("I (A)")

plt.ylabel("V (V)")

plt.legend()

plt.title(f"Resistance {resistance:.2e} Ohms")

plt.close()

return dict(

output={

"resistance": float(resistance),

},

summary_plot=fig,

device_data_pkey=device_data_pkey,

)

if __name__ == "__main__":

d = run(79366)

print(d["output"]["resistance"])
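run() relies on Ohm's law, V = R·I + V0. np.polyfit(i, v, deg=1) returns the coefficients highest power first, so p[0] is the slope of V versus I, which is the resistance. Below, the same ordinary least-squares slope is written out without NumPy as a sketch you can check on synthetic data. fit_line is a hypothetical helper, not part of doplaydo.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Synthetic IV data for an ideal 50 Ohm resistor with a 0.1 V offset
currents = [0.0, 0.01, 0.02, 0.03, 0.04]
voltages = [0.1 + 50 * i for i in currents]
resistance, offset = fit_line(currents, voltages)
print(f"R = {resistance:.3f} Ohm, offset = {offset:.3f} V")  # R = 50.000 Ohm, offset = 0.100 V
```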

```python
# Pick one device data entry from this project to test the analysis function on
device_data, df = dd.get_data_by_query([dd.Project.project_id == PROJECT_ID], limit=1)[0]
device_data.pkey
```

6258

```python
response = dd.api.analysis_functions.validate(
    analysis_function_id="device_iv",
    function_path=dd.config.Path.analysis_functions_device_iv_resistance,
    test_model_pkey=device_data.pkey,
    target_model_name="device_data",
    parameters=dict(min_i=50),
)
Image(response.content)
```

Headers({'server': 'nginx/1.22.1', 'date': 'Fri, 28 Feb 2025 20:59:11 GMT', 'content-type': 'image/png', 'transfer-encoding': 'chunked', 'connection': 'keep-alive', '_output': '{"resistance": 2.2238565488800226e-08}', '_attributes': '{}', '_device_data_pkey': '6258', 'strict-transport-security': 'max-age=63072000'})

```python
# A parameter that run() does not accept is expected to fail validation
try:
    response = dd.api.analysis_functions.validate(
        analysis_function_id="device_iv",
        function_path=dd.config.Path.analysis_functions_device_iv_resistance,
        test_model_pkey=device_data.pkey,
        target_model_name="device_data",
        parameters=dict(wrong_variable=50),
    )
    print(response.text)
except HTTPStatusError:
    pass
```

```python
dd.api.analysis_functions.validate_and_upload(
    analysis_function_id="device_iv",
    function_path=dd.config.Path.analysis_functions_device_iv_resistance,
    test_model_pkey=device_data.pkey,
    target_model_name="device_data",
)
```

Headers({'server': 'nginx/1.22.1', 'date': 'Fri, 28 Feb 2025 20:59:12 GMT', 'content-type': 'image/png', 'transfer-encoding': 'chunked', 'connection': 'keep-alive', '_output': '{"resistance": 2.252319643371782e-08}', '_attributes': '{}', '_device_data_pkey': '6258', 'strict-transport-security': 'max-age=63072000'})

<Response [200 OK]>

Die Analysis

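A die analysis combines the device data available on one die, so you can trigger it once the measurements for that die have been uploaded.

In the following example you will trigger a die analysis that fits the sheet resistance from the 10, 20 and 100 µm wide resistors, which are all 20 µm long.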

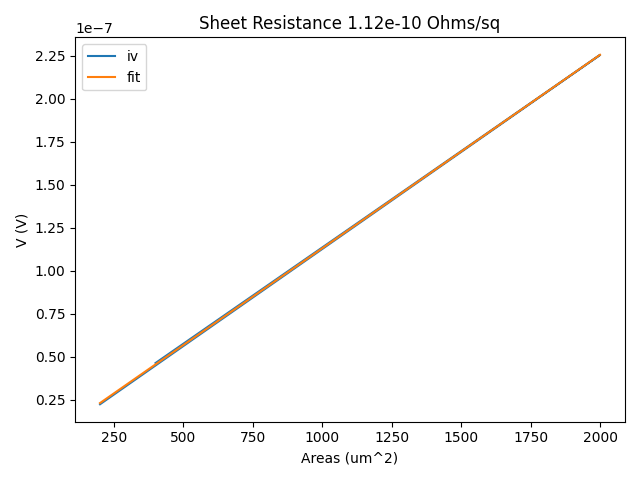

```python
Code(dd.config.Path.analysis_functions_die_sheet_resistance)
```

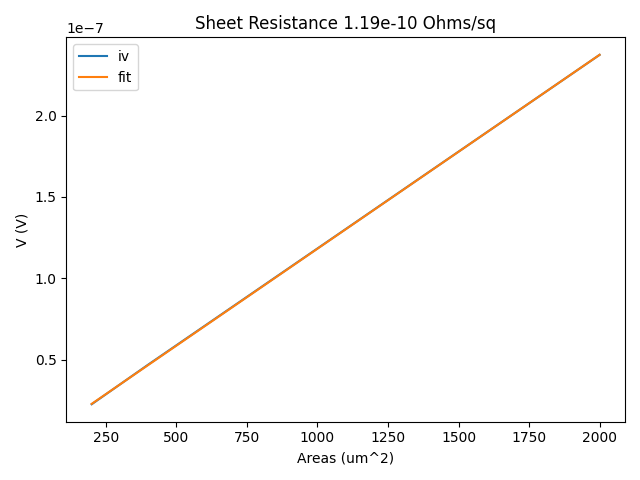

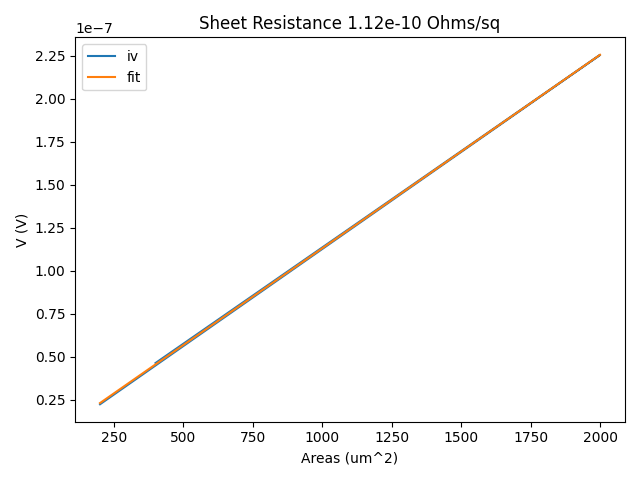

"""Fits sheet resistance from an IV curve and returns Sheet resistance Ohms/sq."""

from typing import Any

import numpy as np

from matplotlib import pyplot as plt

import doplaydo.dodata as dd

def run(

die_pkey: int,

width_key: str = "width_um",

length_key: str = "length_um",

length_value: float = 20,

) -> dict[str, Any]:

"""Fits sheet resistance from an IV curve and returns Sheet resistance Ohms/sq.

It assumes fixed length and sweeps width.

Args:

die_pkey: pkey of the device data to analyze.

width_key: key of the width attribute to filter by.

width_value: value of the width attribute to filter by.

length_key: key of the length attribute to filter by.

length_value: value of the length attribute to filter by.

"""

device_data_objects = dd.get_data_by_query(

[

dd.Die.pkey == die_pkey,

dd.attribute_filter(dd.Cell, length_key, length_value),

]

)

if not device_data_objects:

raise ValueError(

f"No device die found with die_pkey {die_pkey}, length_key {length_key!r}, length_value {length_value}"

)

resistances = []

widths_um = []

lengths_um = []

areas = []

for device_data, df in device_data_objects:

length_um = device_data.device.cell.attributes.get(length_key)

width_um = device_data.device.cell.attributes.get(width_key)

lengths_um.append(length_um)

widths_um.append(width_um)

i = df.i # type: ignore[arg-type, call-arg]

v = df.v # type: ignore[arg-type, call-arg]

p = np.polyfit(i, v, deg=1)

resistances.append(p[0])

area = length_um * width_um

areas.append(area)

p = np.polyfit(areas, resistances, deg=1)

sheet_resistance = p[0]

areas_fit = np.linspace(np.min(areas), np.max(areas), 3)

resistances_fit = np.polyval(p, areas_fit)

fig = plt.figure()

plt.plot(areas, resistances, label="iv", zorder=0)

plt.plot(areas_fit, resistances_fit, label="fit", zorder=1)

plt.xlabel("Areas (um^2)")

plt.ylabel("V (V)")

plt.legend()

plt.title(f"Sheet Resistance {sheet_resistance:.2e} Ohms/sq")

return dict(

output={

"sheet_resistance": float(sheet_resistance),

},

summary_plot=fig,

die_pkey=die_pkey,

)

if __name__ == "__main__":

d = run(11)

print(d["output"]["sheet_resistance"])
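The run() above regresses per-device resistance against device area. For comparison, the conventional definition of sheet resistance R_s for a rectangular resistor of length L and width W is R = R_s · L / W, where L / W is the number of squares. The sketch below estimates R_s from that relation with a least-squares line through the origin. It uses a different estimator from the one in run() and is meant only as a cross-check. sheet_resistance_from_squares is a hypothetical helper.

```python
def sheet_resistance_from_squares(
    resistances_ohm: list[float], lengths_um: list[float], widths_um: list[float]
) -> float:
    """Least-squares R_s for R = R_s * (L / W), i.e. a line through the origin."""
    squares = [length / width for length, width in zip(lengths_um, widths_um)]
    numerator = sum(r * s for r, s in zip(resistances_ohm, squares))
    denominator = sum(s * s for s in squares)
    if denominator == 0:
        raise ValueError("need at least one device with a non-zero number of squares")
    return numerator / denominator


# Synthetic devices: R_s = 100 Ohm/sq, L = 20 um, W = 10, 20, 100 um (as in the manifest)
widths = [10.0, 20.0, 100.0]
lengths = [20.0, 20.0, 20.0]
measured = [100.0 * length / width for length, width in zip(lengths, widths)]
print(sheet_resistance_from_squares(measured, lengths, widths))
```

With L fixed at 20 µm, the manifest's widths of 10, 20 and 100 µm correspond to 2, 1 and 0.2 squares.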

```python
device_data, df = dd.get_data_by_query([dd.Project.project_id == PROJECT_ID], limit=1)[0]
device_data.die.pkey
```

711

```python
die_pkey = device_data.die.pkey

response = dd.api.analysis_functions.validate(
    analysis_function_id="die_iv_sheet_resistance",
    function_path=dd.config.Path.analysis_functions_die_sheet_resistance,
    test_model_pkey=die_pkey,
    target_model_name="die",
)
print(response.headers)
Image(response.content)
```

Headers({'server': 'nginx/1.22.1', 'date': 'Fri, 28 Feb 2025 20:59:12 GMT', 'content-type': 'image/png', 'transfer-encoding': 'chunked', 'connection': 'keep-alive', '_output': '{"sheet_resistance": 1.1925574813033346e-10}', '_attributes': '{}', '_die_pkey': '711', 'strict-transport-security': 'max-age=63072000'})

Headers({'server': 'nginx/1.22.1', 'date': 'Fri, 28 Feb 2025 20:59:12 GMT', 'content-type': 'image/png', 'transfer-encoding': 'chunked', 'connection': 'keep-alive', '_output': '{"sheet_resistance": 1.1925574813033346e-10}', '_attributes': '{}', '_die_pkey': '711', 'strict-transport-security': 'max-age=63072000'})

```python
dd.api.analysis_functions.validate_and_upload(
    analysis_function_id="die_iv_sheet_resistance",
    function_path=dd.config.Path.analysis_functions_die_sheet_resistance,
    test_model_pkey=die_pkey,
    target_model_name="die",
)
```

Headers({'server': 'nginx/1.22.1', 'date': 'Fri, 28 Feb 2025 20:59:13 GMT', 'content-type': 'image/png', 'transfer-encoding': 'chunked', 'connection': 'keep-alive', '_output': '{"sheet_resistance": 1.1925574813033346e-10}', '_attributes': '{}', '_die_pkey': '711', 'strict-transport-security': 'max-age=63072000'})

<Response [200 OK]>

```python
database_dies = []
analysis_function_id = "die_iv_sheet_resistance"
widths_um = dm.width_um.unique()
widths_um
```

array([ 10., 20., 100.])

```python
lengths_um = dm.length_um.unique()
lengths_um
```

array([20])

```python
length_um = lengths_um[0]
analysis_function_id = "die_iv_sheet_resistance"
parameters = [{"width_key": "width_um", "length_key": "length_um"}] * len(wafer_set)

dd.analysis.trigger_die_multi(
    project_id=PROJECT_ID,
    analysis_function_id=analysis_function_id,
    wafer_ids=wafer_set,
    die_xs=die_xs,
    die_ys=die_ys,
    progress_bar=True,
    parameters=parameters,
    n_threads=2,
)
```

```python
plots = dd.analysis.get_die_analysis_plots(
    project_id=PROJECT_ID, wafer_id=wafer_ids[0], die_x=0, die_y=0
)
len(plots)
```

3

```python
for plot in plots:
    display(plot)
```

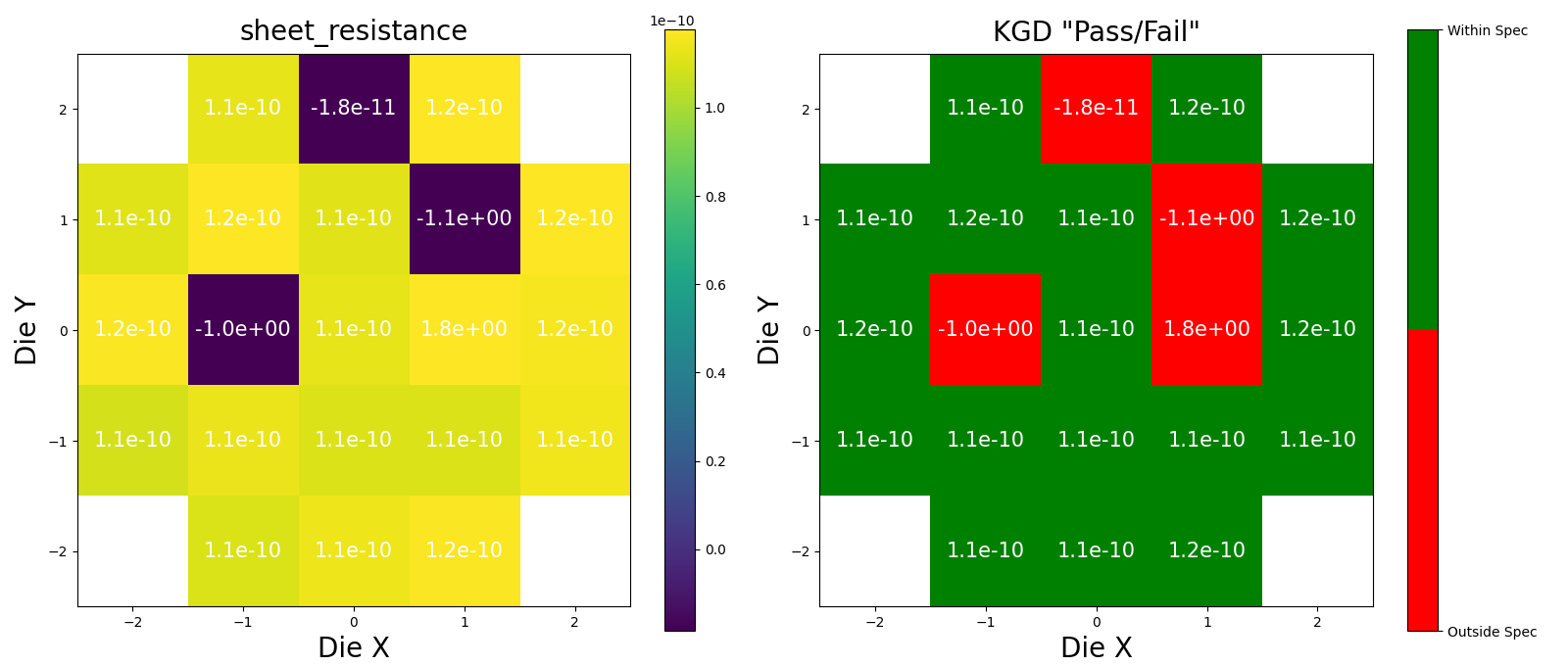

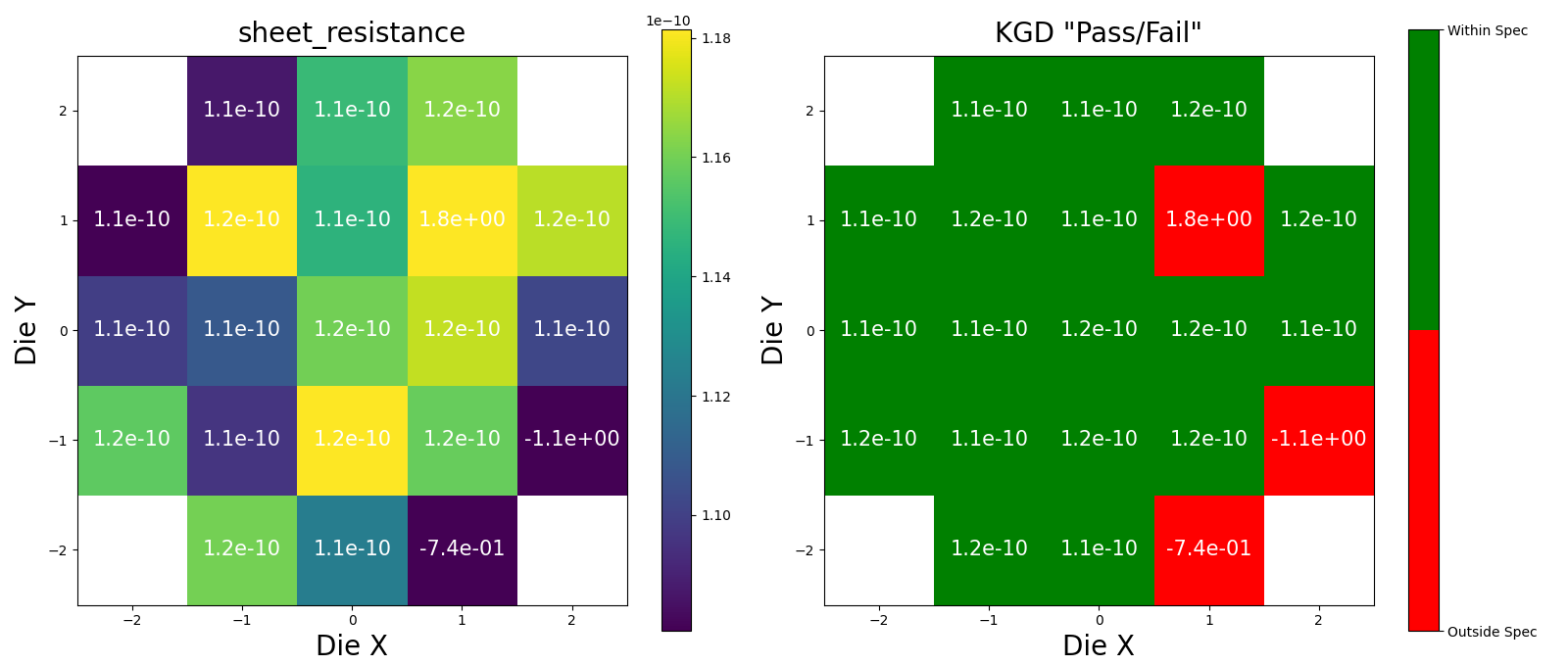

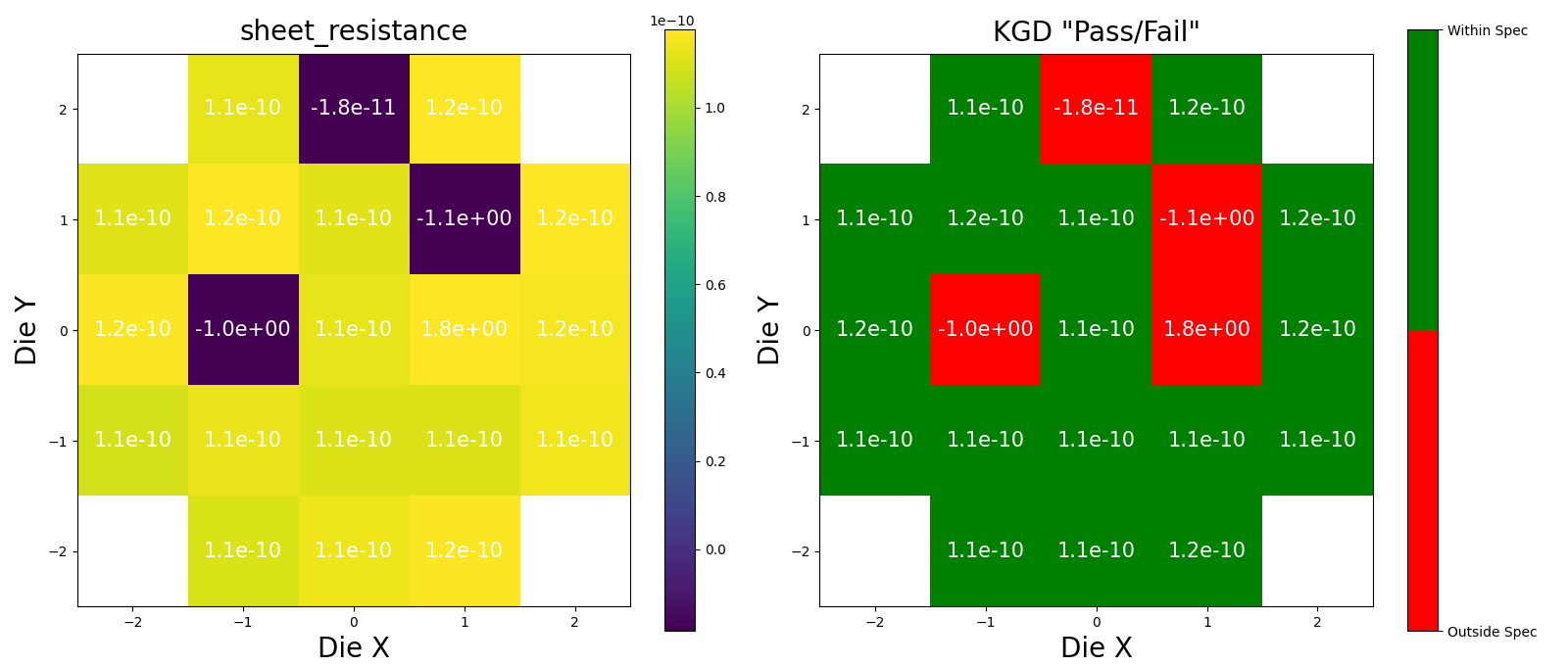

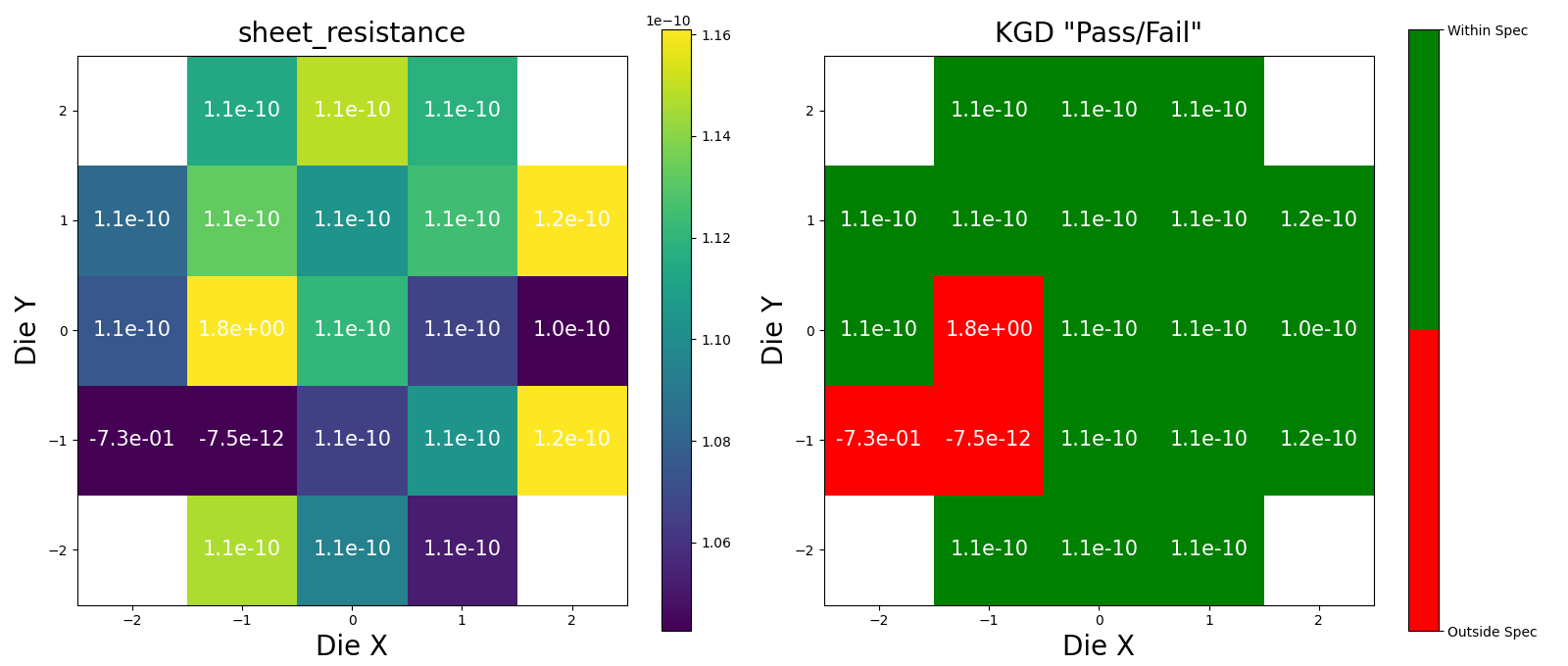

Wafer analysis

Let's define the upper and lower spec limits for Known Good Die (KGD).

Let's find a wafer pkey for this project so that we can trigger the wafer analysis on it.
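The sketch below shows what the spec limits mean for individual die values. It assumes a die passes when lower_spec <= value <= upper_spec; whether the wafer analysis treats the limits as inclusive is an assumption here. classify_dies and kgd_yield are hypothetical helpers. The sample values are four of the per-die results returned by the wafer analysis further down.

```python
def classify_dies(values: list[float], lower_spec: float, upper_spec: float) -> list[bool]:
    """Return True for each die whose value lies within [lower_spec, upper_spec]."""
    return [lower_spec <= value <= upper_spec for value in values]


def kgd_yield(passed: list[bool]) -> float:
    """Fraction of dies that pass the spec limits."""
    return sum(passed) / len(passed) if passed else 0.0


# Four of the per-die sheet-resistance values from the wafer analysis output (Ohms/sq)
values = [1.1444794916524989e-10, -1.0477498208914127, -1.8472575024307858e-11, 1.7855487290707313]
passed = classify_dies(values, lower_spec=1e-12, upper_spec=1e-8)
print(passed, kgd_yield(passed))  # [True, False, False, False] 0.25
```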

```python
device_id
```

'resistance_resistance_sheet_W10_0_52500'

```python
device_data_objects = dd.get_data_objects_by_query(
    [
        dd.Project.project_id == PROJECT_ID,
        dd.Device.device_id == device_data.device.device_id,
    ],
    limit=1,
)
wafer_pkey = device_data_objects[0].die.wafer.pkey
wafer_id = device_data_objects[0].die.wafer.wafer_id
wafer_id
```

'6d4c615ff105'

```python
# Known Good Die spec limits for the sheet resistance
upper_spec = 1e-8
lower_spec = 1e-12

parameters = {
    "upper_spec": upper_spec,
    "lower_spec": lower_spec,
    "analysis_function_id": "die_iv_sheet_resistance",
    "metric": "sheet_resistance",
    "key": None,
    "scientific_notation": True,
    "decimal_places": 1,
    "fontsize_die": 15,
    "percentile_low": 10,
    "percentile_high": 90,
}

response = dd.api.analysis_functions.validate(
    analysis_function_id="wafer_loss_cutback",
    function_path=dd.config.Path.analysis_functions_wafer_loss_cutback,
    test_model_pkey=wafer_pkey,
    target_model_name="wafer",
    parameters=parameters,
)
Image(response.content)
```

Headers({'server': 'nginx/1.22.1', 'date': 'Fri, 28 Feb 2025 21:00:03 GMT', 'content-type': 'image/png', 'transfer-encoding': 'chunked', 'connection': 'keep-alive', '_output': '{"losses": [1.1444794916524989e-10, 1.1925574813033346e-10, 1.1477243202351215e-10, 1.1557340761645108e-10, 1.166143070774012e-10, 1.1740007894987294e-10, -1.0477498208914127, 1.1352237691122143e-10, 1.1152518587735754e-10, 1.1266748491998563e-10, 1.1043209739145013e-10, 1.1239849941179067e-10, -1.0518870403933187, 1.1007547195133378e-10, -1.8472575024307858e-11, 1.103873940866553e-10, 1.1101539764131735e-10, 1.7855487290707313, 1.1766729149315594e-10, 1.170648587149666e-10, 1.0872768611341532e-10]}', '_attributes': '{}', '_wafer_pkey': '24', 'strict-transport-security': 'max-age=63072000'})

```python
response = dd.api.analysis_functions.validate_and_upload(
    analysis_function_id="wafer_loss_cutback",
    function_path=dd.config.Path.analysis_functions_wafer_loss_cutback,
    test_model_pkey=wafer_pkey,
    target_model_name="wafer",
    parameters=parameters,
)
```

Headers({'server': 'nginx/1.22.1', 'date': 'Fri, 28 Feb 2025 21:00:04 GMT', 'content-type': 'image/png', 'transfer-encoding': 'chunked', 'connection': 'keep-alive', '_output': '{"losses": [1.1444794916524989e-10, 1.1925574813033346e-10, 1.1477243202351215e-10, 1.1557340761645108e-10, 1.166143070774012e-10, 1.1740007894987294e-10, -1.0477498208914127, 1.1352237691122143e-10, 1.1152518587735754e-10, 1.1266748491998563e-10, 1.1043209739145013e-10, 1.1239849941179067e-10, -1.0518870403933187, 1.1007547195133378e-10, -1.8472575024307858e-11, 1.103873940866553e-10, 1.1101539764131735e-10, 1.7855487290707313, 1.1766729149315594e-10, 1.170648587149666e-10, 1.0872768611341532e-10]}', '_attributes': '{}', '_wafer_pkey': '24', 'strict-transport-security': 'max-age=63072000'})

```python
parameters_list = [parameters]

for wafer in tqdm(wafer_set):
    for params in parameters_list:
        r = dd.analysis.trigger_wafer(
            project_id=PROJECT_ID,
            wafer_id=wafer,
            analysis_function_id="wafer_loss_cutback",
            parameters=params,
        )
        if r.status_code != 200:
            raise requests.HTTPError(r.text)
```

```python
for wafer_id in wafer_set:
    plots = dd.analysis.get_wafer_analysis_plots(
        project_id=PROJECT_ID, wafer_id=wafer_id, target_model="wafer"
    )
    for plot in plots:
        display(plot)
```

Wafer and Die comparison

You can compare any dies or wafers as another type of aggregated analysis.

```python
filter_clauses = [dd.Project.project_id == PROJECT_ID]
wafers = dd.db.wafer.get_wafers_by_query(filter_clauses)
wafer_pkeys = {w.pkey for w in wafers}
wafer_pkeys
```

{22, 23, 24}

```python
wafer_ids = {w.wafer_id for w in wafers}
wafer_ids
```

{'2eq221eqewq2', '334abd342zuq', '6d4c615ff105'}

```python
analysis_function_id = "die_iv_sheet_resistance"
filter_clauses = [dd.AnalysisFunction.analysis_function_id == analysis_function_id]

# Collect all die-level sheet-resistance analyses for each wafer
analyses_per_wafer = {}
for wafer_id in wafer_ids:
    analyses_per_wafer[wafer_id] = dd.db.analysis.get_analyses_for_wafer(
        project_id=PROJECT_ID,
        wafer_id=wafer_id,
        target_model="die",
        filter_clauses=filter_clauses,
    )

# Flatten into one row per analysis: die, result, and the wafer it belongs to
df = pd.DataFrame(
    [
        {
            "die_pkey": analysis.die_pkey,
            "sheet_resistance": analysis.output["sheet_resistance"],
            "wafer_id": wafer_id,
        }
        for wafer_id, analyses in analyses_per_wafer.items()
        for analysis in analyses
    ]
)
df.head()
```

| | die_pkey | sheet_resistance | wafer_id |
|---|---|---|---|
| 0 | 671 | 1.123394e-10 | 334abd342zuq |
| 1 | 671 | 1.123394e-10 | 334abd342zuq |
| 2 | 671 | 1.123394e-10 | 334abd342zuq |
| 3 | 690 | 1.163631e-10 | 334abd342zuq |
| 4 | 690 | 1.163631e-10 | 334abd342zuq |
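The preview lists die_pkey 671 three times and 690 twice with identical values, so per-wafer statistics would give those dies extra weight. In pandas, df.drop_duplicates(subset="die_pkey") keeps the first row for each die. The same idea without pandas is sketched below, with first_row_per_die as a hypothetical helper. Whether you keep the first row, the latest one (the analysis records carry an is_latest flag), or an average depends on why the duplicates exist.

```python
def first_row_per_die(rows: list[dict]) -> list[dict]:
    """Keep the first row for each die_pkey, preserving the original order."""
    seen: set[int] = set()
    unique_rows = []
    for row in rows:
        if row["die_pkey"] not in seen:
            seen.add(row["die_pkey"])
            unique_rows.append(row)
    return unique_rows


# The five rows of the preview above
rows = [
    {"die_pkey": 671, "sheet_resistance": 1.123394e-10, "wafer_id": "334abd342zuq"},
    {"die_pkey": 671, "sheet_resistance": 1.123394e-10, "wafer_id": "334abd342zuq"},
    {"die_pkey": 671, "sheet_resistance": 1.123394e-10, "wafer_id": "334abd342zuq"},
    {"die_pkey": 690, "sheet_resistance": 1.163631e-10, "wafer_id": "334abd342zuq"},
    {"die_pkey": 690, "sheet_resistance": 1.163631e-10, "wafer_id": "334abd342zuq"},
]
print([row["die_pkey"] for row in first_row_per_die(rows)])  # [671, 690]
```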

```python
dict(analyses_per_wafer[wafer_id][0])
```

```
{'parameters': {'width_key': 'width_um',
  'length_key': 'length_um',
  'length_value': 20},
 'summary_plot': 'summary_plot.png',
 'is_latest': True,
 'input_hash': '',
 'device_data_pkey': None,
 'die_pkey': 650,
 'wafer_pkey': None,
 'pkey': 7844,
 'timestamp': datetime.datetime(2025, 2, 28, 20, 59, 46, 111632),
 'output': {'sheet_resistance': 1.0945353617369346e-10},
 'attributes': {},
 'analysis_function_pkey': 9,
 'analysis_function': AnalysisFunction(analysis_function_id='die_iv_sheet_resistance', hash='ed7a961c3efb2c2841507934c5fca37de375799dbcd2fc6d857e4f8e447731ca', target_model=<AnalysisFunctionTargetModel.die: 'die'>, timestamp=datetime.datetime(2025, 2, 28, 9, 51, 25, 112197), pkey=9, version=1, function_path='analysis_functions/die/die_iv_sheet_resistance/1/run.py', test_target_model_pkey=149)}
```

```python
def remove_outliers(df, column_name="sheet_resistance"):
    """Keep rows within Tukey's fences: [Q1 - 1.5 * IQR, Q3 + 1.5 * IQR]."""
    Q1 = df[column_name].quantile(0.25)
    Q3 = df[column_name].quantile(0.75)
    IQR = Q3 - Q1
    lower_bound = Q1 - 1.5 * IQR
    upper_bound = Q3 + 1.5 * IQR
    return df[(df[column_name] >= lower_bound) & (df[column_name] <= upper_bound)]


filtered_df = remove_outliers(df)
```
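The first remove_outliers applies Tukey's fences: with IQR = Q3 - Q1, it keeps values in [Q1 - 1.5·IQR, Q3 + 1.5·IQR]. The standard-library sketch below lets you check that arithmetic by hand. statistics.quantiles(..., method="inclusive") interpolates linearly between order statistics, which matches pandas' default Series.quantile behavior for these quartiles. iqr_bounds is a hypothetical helper.

```python
import statistics


def iqr_bounds(values: list[float], k: float = 1.5) -> tuple[float, float]:
    """Return Tukey's fences (Q1 - k * IQR, Q3 + k * IQR)."""
    q1, _median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


data = [1.0, 2.0, 3.0, 4.0, 100.0]
low, high = iqr_bounds(data)
print(low, high, [x for x in data if low <= x <= high])  # -1.0 7.0 [1.0, 2.0, 3.0, 4.0]
```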

```python
def remove_outliers(df, column_name="sheet_resistance", min_val=None, max_val=None):
    """Remove rows whose column value falls outside [min_val, max_val]."""
    if min_val is not None:
        df = df[df[column_name] >= min_val]
    if max_val is not None:
        df = df[df[column_name] <= max_val]
    return df


filtered_df = remove_outliers(df, min_val=1e-15, max_val=1e-3)
filtered_df.head()
```

| | die_pkey | sheet_resistance | wafer_id |
|---|---|---|---|
| 0 | 671 | 1.123394e-10 | 334abd342zuq |
| 1 | 671 | 1.123394e-10 | 334abd342zuq |
| 2 | 671 | 1.123394e-10 | 334abd342zuq |
| 3 | 690 | 1.163631e-10 | 334abd342zuq |
| 4 | 690 | 1.163631e-10 | 334abd342zuq |

```python
import plotly.express as px

fig = px.box(
    df,
    x="wafer_id",
    y="sheet_resistance",
    title="Box Plot without Removing Outliers",
    color="wafer_id",  # Assign a different color to each box based on 'wafer_id'
)
fig.show()
```

```python
fig = px.box(
    filtered_df,
    x="wafer_id",
    y="sheet_resistance",
    title="Box Plot after Removing Outliers",
    color="wafer_id",  # Assign a different color to each box based on 'wafer_id'
)
fig.show()
```

```python
import plotly.express as px

# Assuming 'filtered_df' is your DataFrame that has been filtered to remove outliers.
fig = px.box(
    filtered_df,
    x="wafer_id",
    y="sheet_resistance",
    color="wafer_id",  # Assign a different color to each box based on 'wafer_id'.
    title="Box Plot after Removing Outliers",
    category_orders={
        "wafer_id": sorted(filtered_df["wafer_id"].unique())
    },  # Sort the x-axis categories
)

# Seaborn-like box style: semi-transparent markers, thicker lines, and the mean shown
fig.update_traces(marker=dict(opacity=0.6), line=dict(width=2), boxmean=True)

# Cleaner layout, akin to Seaborn, with light grid lines
fig.update_layout(
    template="simple_white",
    xaxis_title="Wafer ID",
    yaxis_title="Sheet Resistance",
    plot_bgcolor="rgba(0,0,0,0)",  # Transparent background
    yaxis=dict(
        gridcolor="rgba(128,128,128,0.5)",  # Light gray grid lines with transparency
        showgrid=True,  # Ensure grid lines are shown
        zerolinecolor="rgba(128,128,128,0.5)",  # Light gray zero line with transparency
    ),
    xaxis=dict(
        gridcolor="rgba(128,128,128,0.5)",  # Light gray grid lines with transparency
        showgrid=True,  # Ensure grid lines are shown
        zerolinecolor="rgba(128,128,128,0.5)",  # Light gray zero line with transparency
    ),
)
fig.show()
```